Learning Tool or Teaching Threat: Students and educators talk ChatGPT

Since its November launch, ChatGPT has created a stir of controversy. Some local teachers and students can see both its benefits and drawbacks. One Lakeview High School senior says she can see how the AI tool could help with generating essay ideas, but as far as using it in place of her own writing, she says, “It would create an interesting perspective, but it would never be your own work.”

Editor’s note: This story is part of Southwest Michigan Second Wave’s Voices of Youth Battle Creek program which is supported by the BINDA foundation, Battle Creek Community Foundation, and the Kellogg Community Foundation.

ChatGPT has its place in a technology toolbox that continues to fill up with newer ways to communicate and perform work, but it’s not a substitute for the human brain as some people have feared, says Chad Edwards, Professor of Communication and Co-Director of a Communications and Social Robotics Lab at Western Michigan University.

“We’ve always had moral panics about new education,” Edwards says. “The best way is to work with it and use it effectively and ethically.”

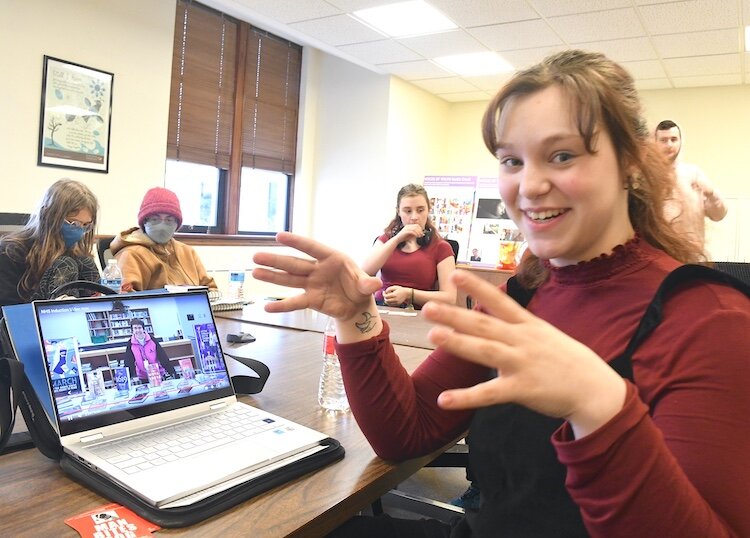

Lauren Davis, a senior at Lakeview High School who has not yet used the technology, says she thinks ChatGPT would be a good tool for brainstorming ideas for English papers and beneficial for putting ideas together or developing a hypothesis for an assignment in a science or English class.

Davis, who is taking a Broadcast Journalism class at Lakeview, is headed to Kellogg Community College after graduating this spring, with plans to transfer to Western Michigan University to complete studies in Sports Management Marketing and Media and Journalism. She also participated in the most recent Battle Creek-based Voices of Youth cohort that wrapped up earlier this month.

“It would create an interesting perspective, but it would never be your own work,” she says of ChatGPT. “In school, plagiarism is incredibly, heavily frowned upon. It’s not OK to use someone else’s words as their own. To have software built to plagiarize is incredibly not OK to use for a finished product. To have a program create an entire school project or essay is very morally wrong to me. It doesn’t make you any more aware of what the project is trying to teach you.”

Kayanna Smith, also a senior at Lakeview High School, has thoughts about ChatGPT that are similar to those expressed by Davis. An aspiring clothing designer, with a focus on using recycled materials, Smith says, “I could see myself using it as a tool for inspiration if I wanted to create something but couldn’t think of a place to start to write a pattern or come up with an idea. I do worry that it could be used as an easy way out for assignments, which could perhaps lead to guidelines and rules that might get stricter with the teacher. There may be more restrictions put in place for assignments.”

Smith, who also has not taken a deep dive into ChatGPT, says she hasn’t spoken with fellow students who have said they’ve used it outright but has already dealt with the consequences when it is not used in the way it is intended.

“Last trimester I had an English assignment that was canceled because too many students turned in papers that used ChatGPT. It was bad for those of us who wrote it without using ChatGPT because we’d already written the paper,” says Smith, also a member of the most recent Voices of Youth cohort in Battle Creek. “I don’t think it’s inherently a negative thing to use or a bad tool to have, but it should not be the first resort to making something you’re using.”

Through Twitter, Edwards says he has seen “all of the hype and a lot of concern” being expressed about students using ChatGPT to cheat or plagiarize.

“We don’t need to be in a situation where we’re banning it from the classroom. We need to be teaching the most competent and ethical ways to use it,” he says.

The majority of careers will adopt some form of artificial intelligence into the work performed, and this is among the many reasons it can’t be ignored, Edwards says. Instead, it must be taught to students in a way that shows them how to work with it ethically and competently.

“In my AI class, we talk a lot about what a great brainstorming tool it is. If there’s something you’re interested in you can type it in and it will give you a great first step,” he says. “It’s a great way to help speed up some tasks like if you had to come up with a simple form letter for something or have an article and need a summary. I would still like them to read the article but it does provide a nice summary.”

However, the information gleaned requires a human set of eyes on it to double-check for accuracy because “GPT doesn’t actually know anything. It’s putting together associated words from its training data and has lots of data. It doesn’t know what history or the Civil War is and creates sentences based on the relative strength of these words to each other,” Edwards says.

While calling it an excellent teaching tool, he says he has concerns about the type of bias within the training data which tends to skew “white and male.”

“There are large language models based on the training model and you can only put out what you put in,” Edwards says. “If it’s sexist, biased, and racist data going in, that’s what you’re going to get. For large language models such as GPT, designers, coders, and engineers have to feed it gigabytes and terabytes of massive data based on previous data. There has to be a lot of transparency in that training data so experts can go back in and look at it. They’re using too much of a white male influence.”

OpenAI is not saying who is putting the text together, and it is not known how much of the training data is supervised or unsupervised. Edwards says OpenAI was hiring Kenyan workers and paying them $2 an hour to screen out extreme violence and graphic sex.

Like any emerging technology, he says, “We need regulations and legislation and transparency about the data and AI systems. It is proprietary data, but there are things that can certainly open up.”

Coming alongside this is the creation of emerging careers geared toward AI. WMU offers a minor called User Experience/Human-Computer Interaction Minor (UXHN).

“That’s an excellent type of degree for students. It teaches students to be in between coders and the clients, customers, and society,” Edwards says. “They know enough about how the system works. They can talk about the user experience and how it interacts with society and work. Some people call them Prompt Engineers.”

These will be on-staff positions at companies. Edwards says a quick check of the job site Monster.com found thousands of these UXHN jobs.

“You don’t need a go-between to use it, but you do need people in companies who understand how technology works in society, the training data, and when it’s worth it and not worth it. AI has been on every CEO’s mind for the last five years, especially last year,” he says.

Getting schooled on ChatGPT

Five days after ChatGPT was launched in November 2022 by OpenAI, it had 1 million users. “The next closest was Instagram which took three months to get to 1 million,” Edwards says. “I think an AI hype cycle happens every few years. Like any new technology, it becomes the thing everyone talks about and then it becomes part of the routine. Right now people are exploring.”

Among those people is Darcy Hassing, an English teacher at Lakeview High School who is also an AP Literature and Yearbook advisor. She has been an educator for 23 years.

Hassing says she has been “playing around” with ChatGPT since December.

“I blew it off at first thinking it can’t be that good. Then I got online and played around with it,” she says.

Among the things she discovered is that the technology will produce a poem written in a certain tone as requested by a user and can re-write an email with a more diplomatic voice. She says it could be beneficial for students who struggle with writing or international students because it provides a starting point and visual model of how words and sentences should be structured.

She says it also is a good tool for teachers, especially for those who create resources such as a unit on Mythology that other teachers can purchase and use.

“I’m in chat groups online and some teachers have said they can do lesson plans with state standards using ChatGPT. It doesn’t give you the full lesson plan and you still have work to do. You still have to have the material and do the creating,” Hassing says. “If I create a lesson plan, I can upload and sell it. Teachers buy from other teachers all the time, but they never once claim it as their own.”

Edwards says his department is constantly running studies to gauge how individuals view pieces written by ChatGPT versus a human being.

“If ChatGPT writes an essay versus a person, do they judge it differently based on trust, credibility, liking, and communication competence?” he says. “I’m positive that students who are using it just straight up will get stuff wrong because the information is a little dated. A skilled professor or teacher could read the difference between a student essay and a ChatGPT-generated essay.”

Hassing says she has found that AI writing lacks the voice and passion a person would convey in their own writing, and that this absence is a sign of AI-generated work.

“It gets the point across but it’s stale,” she says. “I could basically take an essay prompt for whatever I’m working on. I teach AP so sometimes I’ll put in a College Board AP prompt and say write an essay for blah blah blah and put in all of the information and it generates an essay, but it’s not good. There are limitations to what it can and can’t do.”

Essay writing, Davis says, “is one of the most hated things to do in school. Any cheat code students find they will use. I think this makes students incredibly lazy and unaccountable and there’s a lack of creativity and thought.”

Students in Hassing’s classes are doing more in-school, handwritten work as one way to get around the use of ChatGPT.

“Kids are going to do what they’re going to do,” Hassing says. “We have assignments set up in certain ways so kids have to have elements that we teach them that Chat is not going to pick up. The big thing it gets back to is claiming that work as your own. So when a teacher assigns something they’re expecting you to do the work. I need a student to write an essay because I need to assess their skills. I can’t do this if what they claim to have written isn’t really their work.”

Lakeview is among the school districts throughout the United States that use Turnitin, a technology company whose software can identify when AI writing tools such as ChatGPT have been used.

In a 2019 letter to U.S. Senators discussing its privacy practices, Turnitin leadership said that since the company began operating 20 years ago it has served over 15,000 educational institution customers and approximately 34 million high school and higher education students throughout the world.

“We provide products and services that help students develop skills they need to think critically and take ownership of their work,” the letter says. “Central to our operation is a suite of technology solutions that identify plagiarism in students’ written work, facilitate constructive instructional intervention, and help maintain educational institution standards.”

There are a lot of detectors out there like this and more are being developed, Edwards says.

“Educators need to be a little more creative. If they’re asking a question that ChatGPT can answer, they’re asking the wrong question of students, because there’s not enough critical reflection and new stuff,” he says. “You’re asking old and easy questions, questions that a machine could put together as well as students. If generative AI can do it, maybe you’re asking the wrong questions.”

When it comes to writing, Hassing says she teaches certain things a certain way. “We need to make assignments unique so they can’t be done on AI.”

Ultimately, Edwards says, “People will adapt how they grade and have essays in college and high school and find new ways of doing it. I would say don’t be scared of generative AI, but be mindful of it.”